Beyond Moore’s Law: How Computing Progressed and Where It Goes Next

by Scott

Moore’s Law is one of the most famous observations in the history of technology, not because it was a law of physics, but because it proved uncannily accurate for decades. First articulated in the mid-1960s, it described a simple idea: the number of transistors on an integrated circuit would roughly double at regular intervals, originally every year and later revised to about every two years. What began as an industry observation became a guiding principle that shaped how the entire semiconductor world planned, invested, and innovated.
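The doubling rule is simple exponential growth. After t years with a doubling period of T years, a starting count N0 grows to N0 × 2^(t/T). The short Python sketch below illustrates that arithmetic. The function name and parameters are hypothetical, chosen for illustration, and the starting point of roughly 2,300 transistors (Intel’s 4004 from 1971) is only a convenient example. The results are projections of an idealized trend, not real chip data.

```python
def projected_transistors(initial_count: float, years: float, doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, assuming one doubling every `doubling_period` years."""
    # N(t) = N0 * 2 ** (t / T)
    return initial_count * 2 ** (years / doubling_period)


# Illustrative starting point: the Intel 4004 (1971) had roughly 2,300 transistors.
start = 2_300

# Compare the original annual doubling with the later two-year cadence over one decade.
print(projected_transistors(start, 10, doubling_period=1.0))  # 2355200.0 (2,300 * 2**10)
print(projected_transistors(start, 10, doubling_period=2.0))  # 73600.0   (2,300 * 2**5)

# Fifty years at the two-year cadence is 25 doublings.
print(f"{projected_transistors(start, 50):,.0f}")  # 77,175,193,600
```

The comparison shows why the revision from one year to two mattered. Over a single decade, the two cadences differ by a factor of 32, and that gap compounds with every additional year.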

In the early years of computing, progress was slow, and hardware was physically large, expensive, and fragile. Computers relied on vacuum tubes, which consumed enormous amounts of power and failed frequently. The invention of the transistor in the late 1940s marked a turning point: transistors were smaller, more reliable, and vastly more energy efficient. When integrated circuits emerged in the late 1950s and early 1960s, it became possible to place multiple transistors onto a single piece of semiconductor material, opening the door to rapid miniaturization.

Gordon Moore, a chemist and engineer, noticed a pattern while working in the semiconductor industry. In 1965, he observed that the number of components that could be economically placed on a chip was increasing exponentially. He predicted this trend would continue, driven by advances in manufacturing techniques and design efficiency. Moore did not frame this as a guarantee, but rather as an expectation based on current momentum. Few could have imagined just how accurate his observation would become.

Throughout the 1970s and 1980s, Moore’s Law held remarkably well. As photolithography improved, engineers could etch finer and finer features onto silicon wafers. Transistors became smaller, cheaper, and faster. Each generation of chips delivered more computing power at roughly the same cost, enabling personal computers, early networking, and eventually graphical user interfaces. The steady rhythm of improvement created a sense of inevitability, as if computing power were on an unstoppable trajectory.

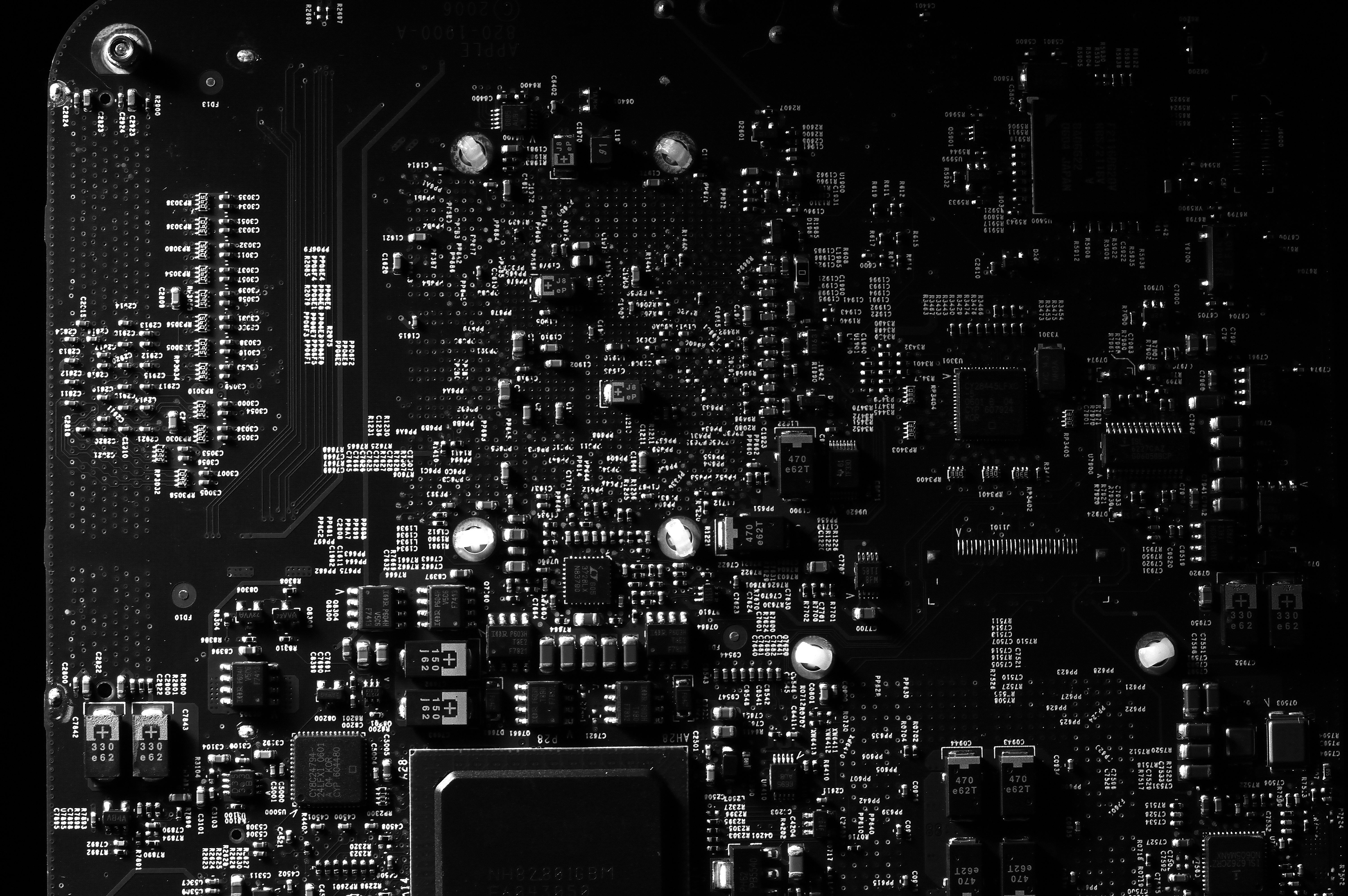

To understand why transistor counts matter, it helps to understand what a transistor actually is. A transistor is an electronic switch that controls the flow of electrical current. It can be turned on or off, representing the binary states of 1 and 0 that underpin digital computing. Inside a processor, billions of transistors work together to perform calculations, move data, and make decisions. More transistors generally mean more capability. Each generation also made individual transistors smaller, so that added capability has historically come with higher performance, lower power per operation, or both.
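To make the switch idea concrete, here is a toy Python sketch built on heavy simplifying assumptions. Each transistor is treated as an ideal on/off switch. A NAND gate is modeled as two switches in series that pull the output to 0 only when both are on. A real CMOS NAND gate uses four transistors, including a complementary pull-up network, which this model ignores. Combining NAND gates produces a half adder, a circuit that adds two bits. All function names are hypothetical, chosen only for illustration.

```python
def switch(gate: int) -> bool:
    """An idealized transistor: conducts (True) when its gate input is 1."""
    return gate == 1


def nand(a: int, b: int) -> int:
    # Two switches in series pull the output to 0 only when BOTH conduct;
    # otherwise the output stays at 1.
    pulled_low = switch(a) and switch(b)
    return 0 if pulled_low else 1


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits using only NAND gates; returns (sum, carry)."""
    n1 = nand(a, b)
    total = nand(nand(a, n1), nand(b, n1))  # XOR built from four NAND gates
    carry = nand(n1, n1)                    # AND is NAND followed by NOT
    return total, carry


for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
# 0 0 (0, 0)
# 0 1 (1, 0)
# 1 0 (1, 0)
# 1 1 (0, 1)
```

Even this tiny adder needs five NAND gates. Under the four-transistors-per-gate figure above, that comes to about twenty transistors, which hints at why real processors require billions of them.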

Transistors are built from semiconductors, materials that sit between conductors and insulators in terms of electrical behavior. Silicon became the dominant semiconductor because it is abundant, stable, and well suited to precise manufacturing. By carefully adding impurities to silicon, a process called doping, engineers can control how electricity flows through it. This ability to engineer electrical behavior at microscopic scales is what makes integrated circuits possible.

Over time, semiconductors found uses far beyond computing. They power radios, televisions, medical equipment, vehicles, industrial machinery, and nearly every modern electronic device. As Moore’s Law progressed, chips became smaller and cheaper, allowing computing to spread into everyday objects. This gave rise to smartphones, embedded systems, and the interconnected devices that now define modern life.

By the early 2000s, the industry began to face serious physical challenges. Transistor features were measured in tens of nanometers, and some layers, such as the insulating film beneath the transistor gate, were only a few atoms thick. At these scales, heat dissipation, electrical leakage, and quantum effects such as electrons tunneling through thin barriers became significant problems. Moore’s Law continued, but with increasing difficulty and cost. Transistor counts still doubled, but rising power density kept clock speeds from climbing much further, so each doubling no longer delivered the same performance gains as before.

In recent years, Moore’s Law has shifted from being a strict prediction to more of a guiding philosophy. Transistor counts still increase, but at a slower pace and with more complex designs. Instead of relying solely on shrinking transistors, engineers use techniques like multi-core processors, specialized accelerators, and advanced packaging. Progress continues, but it no longer feels effortless.

Looking ahead, the future of computing may depend on materials and approaches beyond traditional silicon. Graphene has attracted attention for its exceptional electrical properties, which could enable faster and more efficient devices. Photonic chips aim to use light instead of electricity to move data, reducing heat and increasing speed. Neuromorphic, or brain-inspired, chips explore how principles borrowed from biological neurons could lead to more efficient computing architectures.

Quantum computing represents another frontier that was once considered science fiction. Today, functional quantum processors exist, even if they are still experimental and limited. Classical computers store information in transistors that hold definite binary states. Quantum computers instead use qubits, which exploit quantum effects such as superposition and entanglement to perform certain calculations far more efficiently. While they are unlikely to replace traditional processors for everyday tasks, they offer new possibilities that extend beyond Moore’s Law entirely.

Moore’s Law has been successful not because it was inevitable, but because the industry believed in it. Engineers, companies, and researchers aligned their efforts around a shared expectation of progress. That collective belief helped drive innovation, investment, and collaboration on a global scale. Even as the original formulation becomes less precise, its influence remains deeply embedded in how technology evolves.

In the end, Moore’s Law was never just about transistor counts. It was about momentum, ambition, and the human drive to build better tools. As computing moves into new materials, new architectures, and entirely new paradigms, the spirit of Moore’s Law lives on. Progress may look different in the future, but the pursuit of more powerful, efficient, and transformative technology continues, just as it did when Gordon Moore first noticed a simple pattern and dared to imagine where it might lead.